The “Clean Data First” Trap: A Delaying Tactic

If you’re leading digital transformation in a mid-size enterprise, you already know the refrain: “We’ll do AI once our data is clean.” It sounds sensible, even prudent, but it’s a pervasive trap. Perfect data never arrives, because data in any organization is constantly being created, updated, and sometimes corrupted. Waiting for perfect data isn’t a strategy; it’s a delaying tactic that postpones learning and early value creation while competitors experiment and iterate.

The underlying warning is well founded:

“Poor quality data translates to poor quality AI models and poor results. If your data is biased, inaccurate, incomplete, or outdated, your models will reflect that data and potentially amplify those issues when making predictions or decisions.” – IBM Blog [link]

The point stands: bad data does cause bad AI. But it doesn’t follow that you must achieve immaculate data before engaging with AI. Instead, it prompts a more strategic question: What if you could use AI to help solve your data problem itself?

Quotable One-Liner: “Perfect data never arrives. Waiting for it just delays learning and value creation, allowing competitors to pull ahead.”

Why the Pursuit of Perfection Fails Your AI Ambitions

The classic data quality project is often a Sisyphean task. Teams embark on monumental efforts to clean, migrate, and reconcile historical data, only to find after months or even years that the original business context has shifted, new data sources have emerged, or the core problem itself has evolved. Chasing that elusive ideal carries a significant opportunity cost: while the cleanup runs, competitors are deploying AI, gathering insights, and learning from real usage.

The goal isn’t immediate perfection, but rather a trajectory of continuous improvement.

“High-quality data doesn’t just improve results—it makes AI trustworthy, scalable, and truly valuable.”

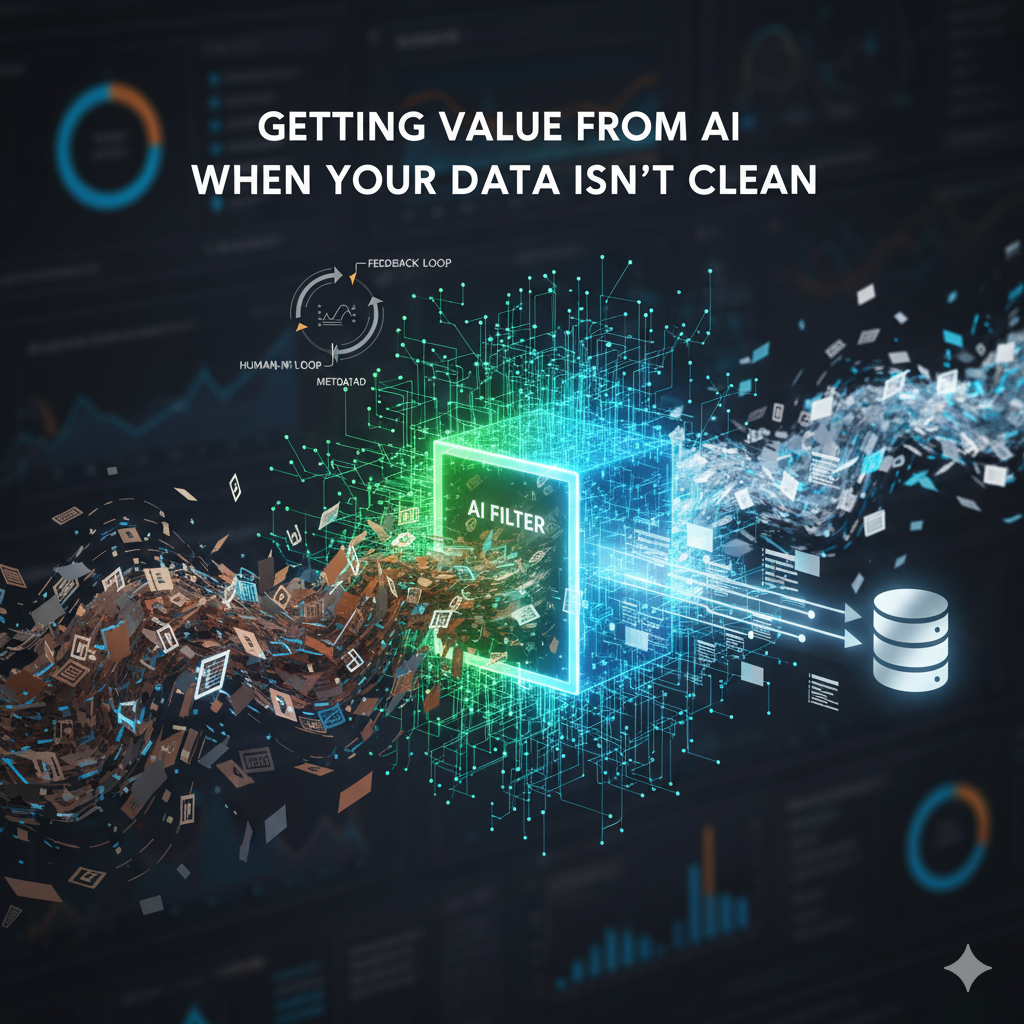

The good news is that modern AI architectures and methodologies allow enterprises to start earlier than before. Techniques like Retrieval-Augmented Generation (RAG), embeddings, and deliberate feedback-loop design let AI work with your current data, while usage surfaces the problems that improve both its performance and the underlying data quality over time. AI isn’t just a consumer of clean data; it can be a catalyst for achieving it.

Three Practical AI Use Cases for Working with Messy Data

Instead of grand, all-encompassing data initiatives, consider these targeted AI applications that derive value even when your data isn’t pristine:

Retrieval-Augmented Search & Q&A (RAG-based):

What it is: Instead of needing to integrate and perfectly structure every disparate data source into a single master database, RAG allows AI to dynamically retrieve relevant information from raw, unstructured documents and databases at the time of query.

How it helps messy data: The AI model uses these retrieved snippets to formulate answers grounded in your own documents, without first requiring a comprehensive, cleansed dataset. It reads context directly from the messy sources, which reduces the need for perfect upfront integration. Answers are only as good as the snippets retrieved, so showing the source alongside each answer lets users spot outdated or conflicting records.

Benefit: Answers stay tied to existing information, without the heavy upfront overhead of a traditional data warehousing project.
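To make the retrieval step concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a production design: simple word-overlap cosine similarity stands in for real embeddings, the document names and contents in `DOCS` are hypothetical, and the final call to a language model is left out entirely.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: dict, k: int = 2) -> list:
    """Return up to k (doc_id, score) pairs, best match first."""
    q = Counter(tokenize(query))
    scored = [
        (doc_id, cosine(q, Counter(tokenize(text))))
        for doc_id, text in documents.items()
    ]
    scored = [pair for pair in scored if pair[1] > 0]  # drop unrelated documents
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


def build_prompt(query: str, documents: dict, k: int = 2) -> str:
    """Assemble a prompt that restricts the model to the retrieved snippets."""
    hits = retrieve(query, documents, k)
    context = "\n".join(f"[{doc_id}] {documents[doc_id]}" for doc_id, _ in hits)
    return (
        "Answer using only the sources below and cite their ids.\n\n"
        f"Sources:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical, deliberately messy sources (assumption for illustration).
DOCS = {
    "warranty_policy.txt": "Warranty period for pumps is 24 months from delivery date.",
    "email_2021.txt": "fwd: re: pump warranty?? customer says 2 yrs, pls confirm",
    "hr_handbook.txt": "Vacation requests must be submitted two weeks in advance.",
}
```

Calling `build_prompt("What is the warranty period for pumps?", DOCS)` yields a prompt that carries the policy document and the informal email, each tagged with its source id, while leaving out the unrelated HR handbook. Keeping the ids in the prompt is what lets an answer point back to the record it came from.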

Human-in-the-Loop (HITL) Copilots for Data Curation:

What it is: AI assistants that work alongside human experts, automating repetitive tasks while flagging anomalies for human review.

How it helps messy data: These copilots can be designed to identify inconsistencies, missing fields, or potential duplicate entries within your existing data. For example, a copilot could highlight two slightly different customer addresses and ask a human to confirm which is correct.

Benefit: Each human correction or validation becomes a valuable “labelled data point,” directly improving the underlying data quality and simultaneously training the AI for better future performance.
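The address example can be sketched in a few lines of Python. This is a rough illustration that assumes string similarity from the standard library's `difflib` is good enough to shortlist candidates; the `0.85` threshold, the record ids, and the decision labels are all hypothetical choices, and a real copilot would likely use a stronger matcher.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from itertools import combinations
from typing import Optional


@dataclass
class ReviewItem:
    left_id: str
    right_id: str
    similarity: float
    decision: Optional[str] = None  # filled in by the human reviewer


def normalize(address: str) -> str:
    """Lowercase, drop commas and periods, collapse whitespace."""
    return " ".join(address.lower().replace(",", " ").replace(".", " ").split())


def flag_possible_duplicates(records: dict, threshold: float = 0.85) -> list:
    """Queue record pairs whose addresses look alike for human review."""
    queue = []
    for (id_a, addr_a), (id_b, addr_b) in combinations(records.items(), 2):
        score = SequenceMatcher(None, normalize(addr_a), normalize(addr_b)).ratio()
        if score >= threshold:
            queue.append(ReviewItem(id_a, id_b, score))
    return queue


def record_decision(item: ReviewItem, decision: str, labels: list) -> None:
    """Store the reviewer's answer as a labelled data point."""
    item.decision = decision
    labels.append(
        {
            "pair": (item.left_id, item.right_id),
            "similarity": round(item.similarity, 3),
            "label": decision,
        }
    )


# Hypothetical customer records (assumption for illustration).
CUSTOMERS = {
    "C1": "12 Main St., Springfield",
    "C2": "12 Main Street, Springfield",
    "C3": "98 Harbor Road, Portland",
}
```

Here `flag_possible_duplicates(CUSTOMERS)` queues only the `C1`/`C2` pair, and each call to `record_decision` appends one entry to `labels`. That list is the by-product worth keeping: it can later be used to tune the threshold or train a better matcher.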

Narrow Domain Copilots (Specialized Automation):

What it is: An AI assistant focused on a single, well-defined process with specific, albeit potentially messy, data.

How it helps messy data: By restricting the scope—for instance, to invoice matching, warranty lookup, or customer support ticket routing—the copilot can quickly learn the quirks and common patterns of that particular dataset. It surfaces gaps, discrepancies, or missing information within its narrow domain automatically.

Benefit: This approach provides immediate, measurable value in a controlled environment, proving AI’s utility and building trust without tackling enterprise-wide data chaos. It offers a scalable blueprint for future expansion.
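As one way to picture a narrow-scope copilot, the Python sketch below handles only invoice matching. Everything in it is an assumption made for illustration: the field names (`id`, `po_number`, `amount`), the PO number formats, and the rule-based matching itself, which stands in for whatever model or logic a real deployment would use. The point it shows is that a narrow process can report gaps as a by-product of doing its job.

```python
import re
from typing import Optional


def normalize_po(raw: Optional[str]) -> Optional[str]:
    """Reduce variants like 'PO-00123' or 'po 123' to the bare number '123'."""
    if not raw:
        return None
    digits = re.sub(r"\D", "", raw)
    return digits.lstrip("0") or None


def match_invoice(invoice: dict, purchase_orders: dict, tolerance: float = 0.01) -> dict:
    """Match one invoice to a purchase order, or describe why it could not be matched."""
    result = {"invoice": invoice["id"], "status": "matched", "issue": None}
    po = normalize_po(invoice.get("po_number"))
    if po is None:
        result.update(status="gap", issue="missing PO number")
    elif po not in purchase_orders:
        result.update(status="gap", issue=f"unknown PO {po}")
    elif abs(invoice["amount"] - purchase_orders[po]) > tolerance:
        result.update(
            status="mismatch",
            issue=f"amount {invoice['amount']} vs PO {purchase_orders[po]}",
        )
    return result


def gap_report(invoices: list, purchase_orders: dict) -> list:
    """Return only the invoices that need attention."""
    results = [match_invoice(inv, purchase_orders) for inv in invoices]
    return [r for r in results if r["status"] != "matched"]


# Hypothetical data (assumption for illustration).
PURCHASE_ORDERS = {"123": 500.0, "456": 80.0}
INVOICES = [
    {"id": "INV-1", "po_number": "PO-00123", "amount": 500.0},
    {"id": "INV-2", "po_number": None, "amount": 75.0},
    {"id": "INV-3", "po_number": "po 456", "amount": 88.0},
]
```

Running `gap_report(INVOICES, PURCHASE_ORDERS)` leaves out the cleanly matched invoice and returns the two that need a human: one with no PO number and one whose amount disagrees with its purchase order.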

“Start where your data is—AI can help clean it as it learns, turning every interaction into an improvement.”

Building Feedback Loops that Elevate Data Quality

The true magic of using AI with imperfect data lies in engineering effective feedback loops. Every AI interaction, every user query, and every human correction can generate valuable metadata. This metadata provides critical insights: which data sources were most reliable, which AI answers required human intervention, or which records were consistently problematic.

By feeding this intelligence back into your data pipelines and quality processes, you create a powerful virtuous cycle: AI usage actively improves data consistency over time. Consider adding a confidence indicator to AI outputs—a simple green, amber, or red light—to visually signal reliability to users. This transparency encourages users to contribute corrections, fostering a collaborative environment where data quality continuously improves. This user engagement is key to driving adoption and trust.
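A small Python sketch shows how the traffic-light indicator and the correction metadata could fit together. The thresholds (`0.8`, `0.5`, a 30% correction rate) and the source names are placeholder assumptions, and how a confidence score is produced in the first place is outside the scope of this sketch.

```python
from collections import defaultdict


def traffic_light(confidence: float, green_at: float = 0.8, amber_at: float = 0.5) -> str:
    """Map a 0..1 confidence score to the indicator shown to users."""
    if confidence >= green_at:
        return "green"
    if confidence >= amber_at:
        return "amber"
    return "red"


class FeedbackLog:
    """Counts answers and human corrections per data source."""

    def __init__(self) -> None:
        self._stats = defaultdict(lambda: {"answers": 0, "corrections": 0})

    def record(self, source: str, corrected: bool) -> None:
        """Log one AI answer drawn from `source` and whether a user corrected it."""
        self._stats[source]["answers"] += 1
        if corrected:
            self._stats[source]["corrections"] += 1

    def correction_rate(self, source: str) -> float:
        stats = self._stats[source]
        return stats["corrections"] / stats["answers"] if stats["answers"] else 0.0

    def sources_needing_attention(self, min_answers: int = 3, max_rate: float = 0.3) -> list:
        """Sources with enough traffic and a high share of corrected answers."""
        return sorted(
            source
            for source, stats in self._stats.items()
            if stats["answers"] >= min_answers
            and stats["corrections"] / stats["answers"] > max_rate
        )
```

Feeding the output of `sources_needing_attention()` into the data team's backlog is one simple way to close the loop: the sources users correct most often become the next cleanup candidates, instead of cleaning everything up front.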

“Every AI interaction can be a data improvement cycle: deploy, observe, refine, and govern.”

Balancing Pragmatism with Essential Governance

Even early, targeted AI deployments must operate within a clear framework of privacy, security, and compliance. The goal is not perfection, but it is certainly not recklessness either. Keep your governance framework light but explicit, so that risks are limited now and uncontrolled “shadow AI” initiatives don’t proliferate later.

Key governance considerations include:

Track Data Sources and Access Policies: Clearly document where data is coming from and who has access, both for the AI and the underlying data.

Store Prompts and Outputs for Audit: Maintain a log of inputs provided to the AI and the outputs it generates for traceability and accountability.

Define Roles for Review and Escalation: Establish clear responsibilities for monitoring AI performance, reviewing flagged data issues, and escalating critical problems.
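The three considerations above can be combined into one lightweight audit record, sketched here in Python. The field names, the `data_steward` role, and the JSON-lines format are assumptions chosen for illustration; the checksum is a basic tamper check, not a substitute for proper access controls or retention policy.

```python
import hashlib
import json
from datetime import datetime, timezone


def _checksum(body: dict) -> str:
    """SHA-256 over the record's canonical JSON form."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def audit_record(user: str, prompt: str, output: str, sources: list,
                 escalate_to: str = "data_steward") -> dict:
    """Build one audit entry: who asked what, what came back, from which sources."""
    body = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt": prompt,
        "output": output,
        "sources": sorted(sources),   # which data the answer drew on
        "escalate_to": escalate_to,   # role responsible for review (hypothetical name)
    }
    body["checksum"] = _checksum(body)
    return body


def to_json_line(record: dict) -> str:
    """Serialize a record as one line, suitable for an append-only log file."""
    return json.dumps(record, sort_keys=True)


def verify(record: dict) -> bool:
    """Check that a stored record has not been altered since it was written."""
    body = {key: value for key, value in record.items() if key != "checksum"}
    return _checksum(body) == record["checksum"]
```

Writing one such line per interaction keeps the log cheap to produce, and `verify` gives reviewers a quick way to confirm that a stored prompt and output are the ones originally recorded.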

Proactive, pragmatic governance ensures that even iterative AI development contributes to a secure and compliant future, fostering long-term trust and sustainable adoption.

“Governance done early prevents ‘shadow AI’ later, ensuring trust and compliant innovation.”

In Summary: Don’t Wait, Iterate with Intelligence

The journey to getting value from AI isn’t about waiting for an impossible ideal of perfect data. It’s about setting the right boundaries, embracing a learning mindset, and deploying AI strategically to address specific pain points. Let AI expose the gaps in your data, involve humans actively in the loop, and you will witness both data quality and organizational trust improve with every cycle.

According to Enterprise Sight, the fastest path to AI maturity for mid-size enterprises is to deploy, observe, refine, and govern. This iterative approach transforms data challenges into opportunities for continuous improvement and tangible value. Explore practical frameworks for structured AI adoption in the [Data Governance Starter] and [ESML Framework] sections at enterprisesight.com.