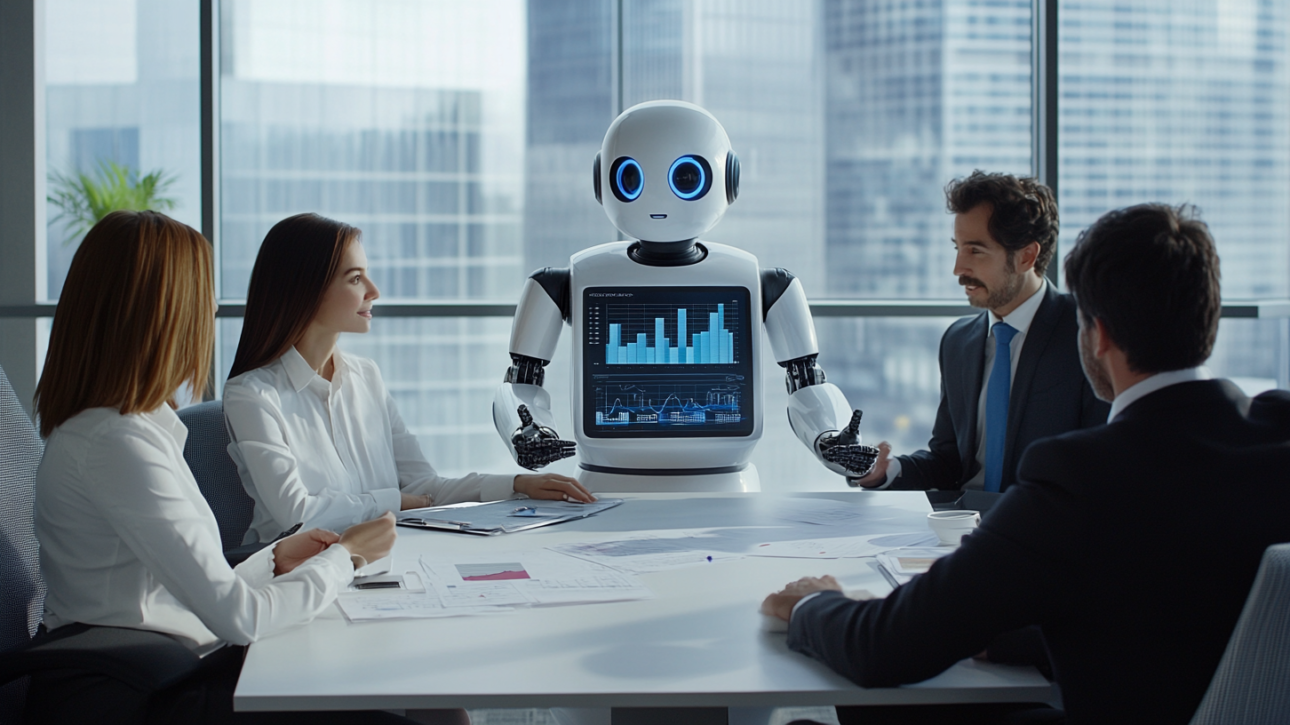

Artificial intelligence has been around for decades, but in the past few years, excitement around it has surged. AI chatbots are carrying on human-like conversations, AI is generating art, and AI models are predicting what you will buy before you even know you want it. Businesses see the massive potential of AI and are naturally…